Understanding Deepfake Technology: Implications and Solutions

Rapid advances in artificial intelligence (AI) have given rise to deepfakes: highly realistic media that are generated or manipulated with machine learning. While deepfakes can be used for entertainment and artistic purposes, they also pose significant ethical, security, and societal challenges. This article explores how deepfake technology works, what its implications are, and which solutions could mitigate its risks.

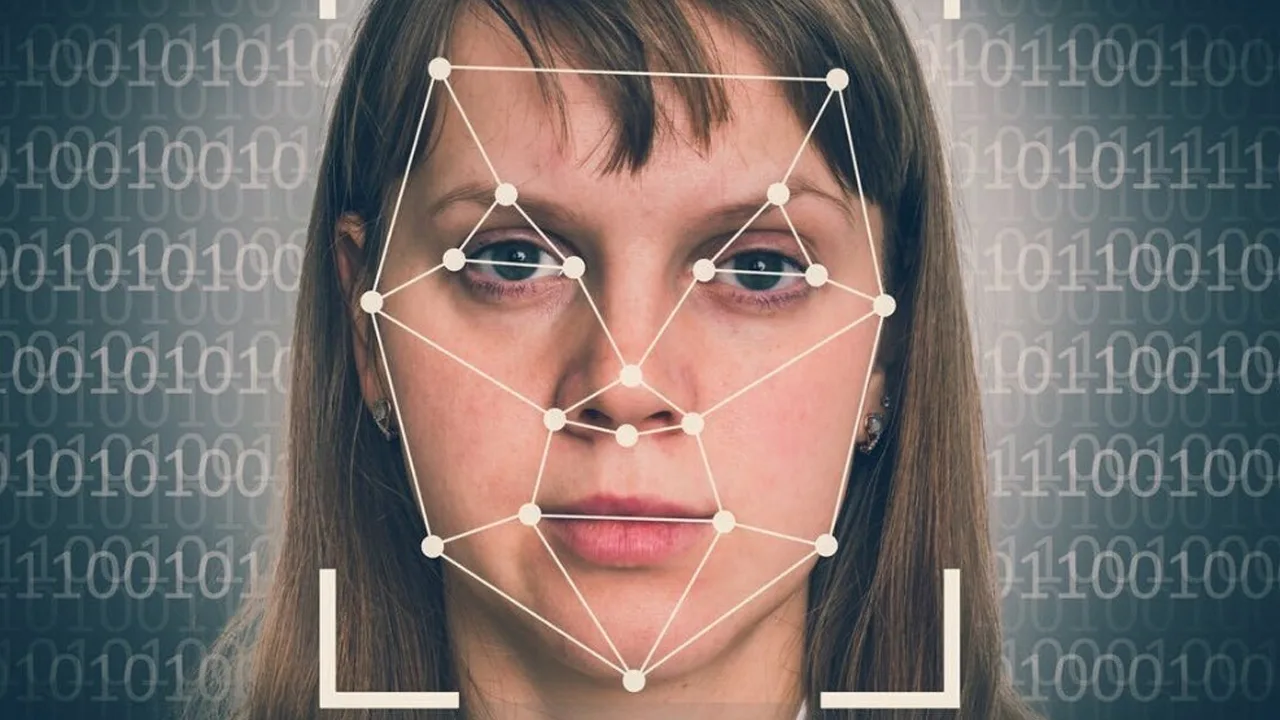

Deepfakes are synthetic media created using AI algorithms, particularly deep learning techniques. These techniques involve training neural networks on large datasets of real media to generate realistic images, videos, or audio recordings. The term "deepfake" is derived from "deep learning" and "fake," reflecting the technology's core principles.

Deepfake technology often relies on Generative Adversarial Networks (GANs), a class of machine learning frameworks. A GAN consists of two neural networks: the generator and the discriminator. The generator creates fake media, while the discriminator judges whether each sample is real or generated. Through iterative training, the generator learns to produce increasingly convincing forgeries that the discriminator struggles to distinguish from real media.
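To make the generator-versus-discriminator loop concrete, here is a minimal sketch in plain Python. It is a toy under stated assumptions, not how production deepfake models are built: the "media" are single numbers drawn from a bell curve centered at 4.0, the generator is a line G(z) = a*z + b, and the discriminator is a single logistic unit D(x) = sigmoid(w*x + c). All function names, the learning rate, and the target distribution are illustrative choices.

```python
import math
import random


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def discriminator_step(w, c, real, fake, lr):
    """One gradient step on -[log D(real) + log(1 - D(fake))]."""
    grad_w = grad_c = 0.0
    for x in real:
        err = sigmoid(w * x + c) - 1.0  # pushes D(real) toward 1
        grad_w += err * x / len(real)
        grad_c += err / len(real)
    for x in fake:
        err = sigmoid(w * x + c)        # pushes D(fake) toward 0
        grad_w += err * x / len(fake)
        grad_c += err / len(fake)
    return w - lr * grad_w, c - lr * grad_c


def generator_step(a, b, w, c, noise, lr):
    """One gradient step on -log D(G(z)), i.e. try to fool the discriminator."""
    grad_a = grad_b = 0.0
    for z in noise:
        x = a * z + b                               # generated sample
        dloss_dx = (sigmoid(w * x + c) - 1.0) * w   # chain rule through D
        grad_a += dloss_dx * z / len(noise)
        grad_b += dloss_dx / len(noise)
    return a - lr * grad_a, b - lr * grad_b


def train(steps=2000, batch=32, lr=0.05, seed=0):
    """Alternate discriminator and generator updates on toy 1-D data."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # generator parameters (assumed starting point)
    w, c = 0.0, 0.0   # discriminator parameters
    for _ in range(steps):
        real = [rng.gauss(4.0, 0.5) for _ in range(batch)]  # assumed "real" data
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * z + b for z in noise]
        w, c = discriminator_step(w, c, real, fake, lr)
        a, b = generator_step(a, b, w, c, noise, lr)
    return a, b, w, c
```

Calling train() returns the final parameters (a, b, w, c). Toy setups like this can oscillate rather than settle, so treat the code as an illustration of the alternating updates, not as a recipe for convergence.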

The Rise of Deepfakes

Deepfake technology gained prominence around 2017, when the term itself came into use, and quickly captured public attention. Early applications included entertainment uses such as swapping faces in film clips or creating realistic avatars for video games. The potential for misuse soon became evident, however, raising concerns about the technology's darker applications.

Implications of Deepfake Technology

Combating Deepfakes: Potential Solutions

Addressing the challenges posed by deepfakes requires a multi-faceted approach involving technological, legal, and societal measures.

Technological Solutions: Developing advanced detection algorithms is crucial in the fight against deepfakes. Researchers are working on AI tools that can identify subtle inconsistencies in deepfake media, such as unnatural facial movements, mismatched audio-visual cues, and irregular lighting patterns. Collaboration between tech companies, academic institutions, and governments can accelerate the development and deployment of these detection technologies.
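As a rough illustration of the "mismatched audio-visual cues" idea, the sketch below checks whether lip movement tracks audio loudness across the frames of a clip. It is a simple heuristic under stated assumptions, not a real detector: the inputs (a per-frame mouth-opening measurement and a per-frame audio energy value) are assumed to come from some upstream face and audio analysis, and the function names and the 0.3 threshold are hypothetical, uncalibrated choices. Detection systems in practice are usually trained models rather than a single hand-written rule.

```python
import math


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)


def looks_out_of_sync(mouth_opening, audio_energy, threshold=0.3):
    """Flag a clip whose lip motion barely tracks its audio loudness.

    The threshold is an arbitrary illustrative value, not a calibrated one.
    """
    return pearson(mouth_opening, audio_energy) < threshold


# Hypothetical per-frame measurements for a short clip.
mouth = [0.1, 0.5, 0.9, 0.4, 0.1]
synced_audio = [0.2, 1.0, 1.8, 0.8, 0.2]      # loudness rises with mouth opening
mismatched_audio = [1.8, 1.0, 0.2, 1.2, 1.8]  # loudness falls as the mouth opens
```

With these made-up numbers, looks_out_of_sync(mouth, synced_audio) is False and looks_out_of_sync(mouth, mismatched_audio) is True. A flag like this would only be one weak signal among many, since genuine footage can also have poor lip sync.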

Regulation and Legislation: Governments must enact robust laws and regulations to address the creation and distribution of malicious deepfakes. Legal frameworks should include penalties for those who create malicious deepfakes and for platforms that facilitate their dissemination. For instance, the Deepfake Report Act, passed by the U.S. Senate in 2019, would require the Department of Homeland Security to assess the impact of deepfake technology and recommend measures to mitigate its risks.

Public Awareness and Education: Raising public awareness about deepfakes is essential to prevent their misuse. Media literacy programs should teach individuals how to critically evaluate digital content and recognize potential deepfakes. Public awareness campaigns can also highlight the ethical implications of creating and sharing deepfake media.

Industry Standards and Best Practices: The tech industry should establish standards and best practices for the ethical use of deepfake technology. Platforms that host user-generated content must implement stringent measures to detect and remove deepfake media. Transparency in the development and deployment of AI technologies can help build public trust.

Collaboration and Research: International cooperation and information sharing are vital in combating deepfakes. Governments, tech companies, and research institutions should collaborate to develop effective countermeasures and stay ahead of emerging threats. Funding for research into deepfake detection and prevention should be prioritized.

The Future of Deepfake Technology

As AI and machine learning continue to advance, deepfakes are likely to become more sophisticated and harder to detect. The line between real and synthetic media will blur, posing ongoing challenges for society. However, with proactive measures and collective efforts, it is possible to harness the benefits of deepfake technology while mitigating its risks.

Innovations in deepfake technology also hold promise for positive applications. For instance, in the entertainment industry, deepfakes can revolutionize visual effects and create realistic virtual actors. In education, deepfake technology can be used to create immersive learning experiences. In healthcare, deepfakes can assist in medical simulations and training.

Conclusion

Deepfake technology represents a significant milestone in the field of artificial intelligence, offering both opportunities and challenges. While the potential for misuse is considerable, the development of robust detection tools, legal frameworks, and public awareness initiatives can help mitigate the risks associated with deepfakes. By fostering collaboration and research, society can navigate the complexities of this technology and ensure its ethical and beneficial use. As we move forward, balancing innovation with vigilance will be key to preserving trust and integrity in the digital age.